About a year ago, Microsoft introduced a new family of compact AI models with the debut of Phi-3 through Azure AI Foundry. Since then, these smaller language models (SLMs) have found their way into Windows 11, where they continue to receive monthly updates to improve performance and functionality.

Now the lineup grows further with the arrival of Phi-4-Reasoning, Phi-4-Reasoning-Plus, and Phi-4-Mini-Reasoning, marking a new phase in the development of smaller, faster, and more capable models.

Smarter Reasoning, Less Overhead

What makes the Phi-4 reasoning models stand out is their ability to break complex tasks down into logical steps, a process known as multi-step reasoning. They excel at mathematical problem-solving and decision-making workflows, tasks typically reserved for much larger models.

Thanks to advanced training techniques like distillation, reinforcement learning, and the use of curated, high-quality datasets, these models strike a careful balance: small enough to run efficiently on low-power hardware, yet smart enough to tackle sophisticated tasks. This makes them a strong fit for agent-style applications — software that needs to plan, reflect, and adapt dynamically.

Phi-4-Reasoning and Phi-4-Reasoning-Plus

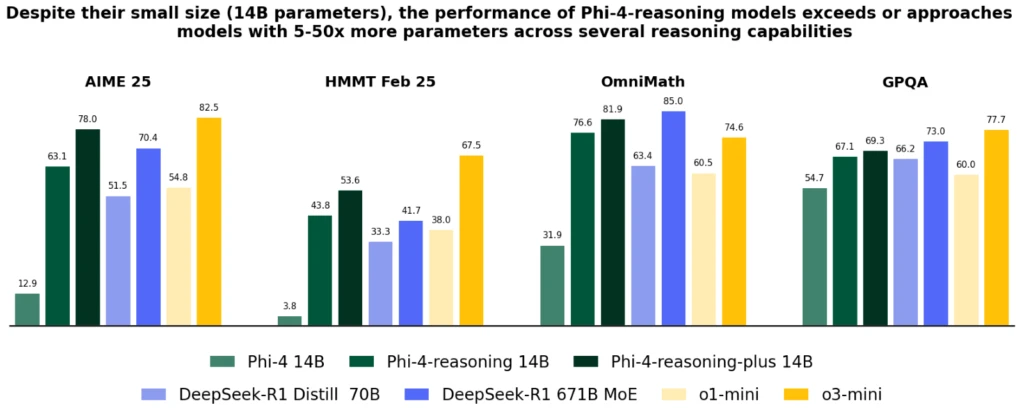

Phi-4-Reasoning is a streamlined reasoning model with 14 billion parameters. Despite its small size, it competes with much larger systems on complex problems. It was fine-tuned on a carefully selected set of reasoning demonstrations generated with OpenAI’s o3-mini. This training teaches the model to produce clear, step-by-step reasoning chains that make good use of the extra compute spent at inference time.

One of the key strengths of Phi-4-Reasoning is the quality of its curated training data, both real and synthetically generated. This attention to data during development allows it to deliver results comparable to much larger models.
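To put the 14-billion-parameter figure in perspective, here is a rough, weights-only memory estimate at common numeric precisions. This is a back-of-the-envelope sketch: the precision table and the helper `weight_memory_gb` are illustrative assumptions, and activations, the KV cache, and runtime overhead are ignored, so real usage will be higher.

```python
# Weights-only memory estimate for a dense model.
# Assumption: activations, KV cache, and runtime overhead are NOT counted.

# Bytes used to store one parameter at each precision (illustrative table).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Return the memory needed for the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


PHI4_REASONING_PARAMS = 14e9  # 14 billion parameters, as stated in the article

for precision, nbytes in BYTES_PER_PARAM.items():
    gb = weight_memory_gb(PHI4_REASONING_PARAMS, nbytes)
    print(f"{precision:>10}: ~{gb:.0f} GB")
```

For 14 billion parameters this works out to roughly 56 GB at 32-bit, 28 GB at 16-bit, 14 GB at 8-bit, and 7 GB at 4-bit precision, counting the weights alone.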

Phi-4-Reasoning-Plus

Building on Phi-4-Reasoning, the Plus version goes a step further. It adds reinforcement learning, which trains the model to spend more compute at inference time: it generates about 1.5 times as many tokens as Phi-4-Reasoning. This leads to higher accuracy, especially on tasks that demand deeper reasoning.
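The practical cost of that trade-off can be sketched with simple arithmetic. The base trace length and decoding speed below are hypothetical placeholders, not measured values; only the 1.5x ratio comes from the article.

```python
# Rough cost of the "about 1.5x more tokens" trade-off.
# Assumption: generation time scales linearly with the number of generated tokens.

def plus_token_estimate(base_tokens: int, ratio: float = 1.5) -> int:
    """Estimate tokens generated by the Plus model for the same prompt."""
    return round(base_tokens * ratio)


def generation_seconds(tokens: int, tokens_per_second: float) -> float:
    """Estimate decoding time at a fixed throughput."""
    return tokens / tokens_per_second


BASE_TRACE_TOKENS = 4000   # hypothetical reasoning-trace length
TOKENS_PER_SECOND = 40.0   # hypothetical decoding speed on some device

plus_tokens = plus_token_estimate(BASE_TRACE_TOKENS)
print("base:", BASE_TRACE_TOKENS, "tokens,",
      generation_seconds(BASE_TRACE_TOKENS, TOKENS_PER_SECOND), "s")
print("plus:", plus_tokens, "tokens,",
      generation_seconds(plus_tokens, TOKENS_PER_SECOND), "s")
```

Under these assumed numbers, a 4,000-token trace taking 100 seconds becomes a 6,000-token trace taking 150 seconds, which is the price paid for the accuracy gain.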

Despite being far more compact, both Phi-4 reasoning models outperform larger systems like OpenAI’s o1-mini and DeepSeek-R1-Distill-Llama-70B on a variety of benchmarks. These include challenging areas such as mathematical problem-solving and advanced academic questions.

Both models are currently available through Azure AI Foundry and Hugging Face for use in research and development settings.
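As a rough illustration of the Hugging Face route, the sketch below loads the model with the `transformers` library and asks it one question. The repository id `microsoft/Phi-4-reasoning`, the helper names, and the token limit are assumptions to check against the model card, which may also recommend a specific system prompt that is not included here. Running it requires `transformers`, `torch`, and `accelerate`, plus enough memory for a 14-billion-parameter model.

```python
# Minimal sketch: querying the model through Hugging Face transformers.
# MODEL_ID is an assumed repository id; verify it on the model card before use.
MODEL_ID = "microsoft/Phi-4-reasoning"


def build_messages(question: str) -> list[dict[str, str]]:
    """Wrap a user question in the chat-message format used by chat templates."""
    return [{"role": "user", "content": question}]


def generate_answer(question: str, max_new_tokens: int = 1024) -> str:
    """Download the model (large!) and generate a reply to one question."""
    # Imported lazily so build_messages works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )

    # Format the conversation with the model's own chat template.
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Keep only the newly generated tokens, dropping the prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

Calling `generate_answer("What is the sum of the first 100 positive integers?")` would download the weights on first use and return the model's reasoning and answer as text.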

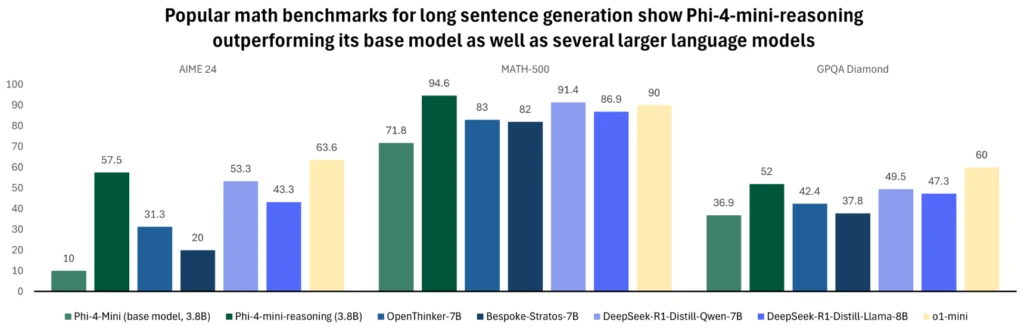

Phi-4-Mini-Reasoning

Phi-4-Mini-Reasoning was developed to deliver advanced reasoning in a smaller, faster package. This transformer-based language model is tailored for solving complex math problems, offering accurate, step-by-step solutions even where computing power or response time is limited.

The model was trained on over a million diverse math problems, ranging from high school exercises to doctoral-level questions, using synthetic data generated by DeepSeek-R1. The result is a balanced system that maintains strong reasoning skills without demanding heavy resources.

Thanks to its efficiency, Phi-4-Mini-Reasoning fits well in educational tools, digital tutors, and lightweight applications. It’s especially well-suited for mobile devices or edge computing, where hardware constraints are common.

Over the past year, the Phi model family has steadily improved, pushing the limits of what compact models can do. New versions have been introduced to meet a variety of needs, including running directly on Windows 11 devices. These models are optimized to perform locally on both CPUs and GPUs, making them more accessible across a wide range of systems.

As Windows develops its next generation of personal computers, Phi models are playing a key role. One example is the Phi Silica variant, which is optimized for Neural Processing Units (NPUs). This version is tightly integrated with the operating system and is designed to stay loaded in memory for instant use.

Its lightweight footprint, fast response times, and efficient power use make it an ideal companion for apps running directly on your PC. Whether it’s helping students learn or enabling smart productivity tools, Phi models are shaping a new class of intelligent, responsive computing.