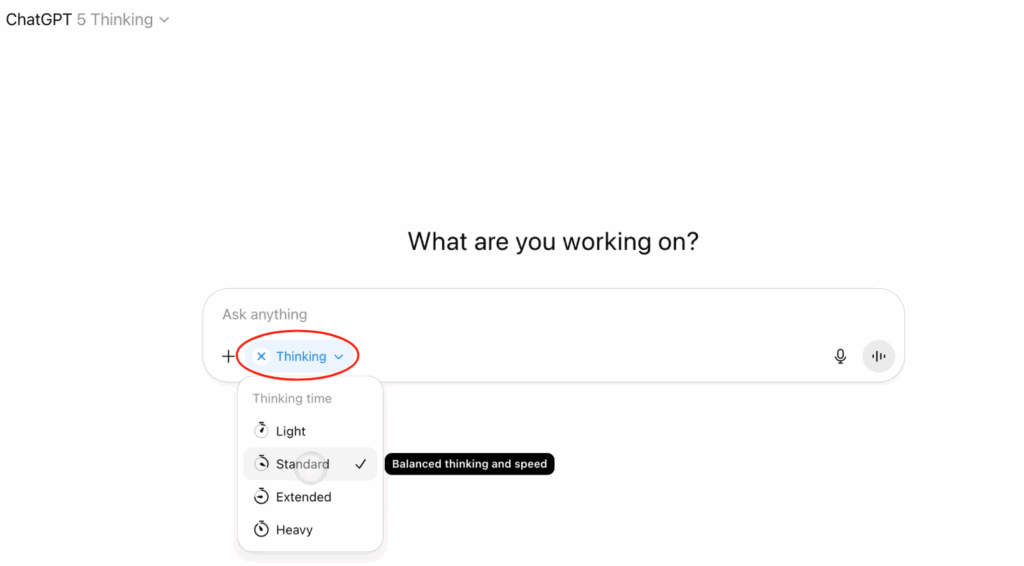

OpenAI has introduced new controls for the Thinking mode in GPT-5, a feature designed for handling more demanding queries. With the update, users can choose how long the model spends processing a request before it answers, trading response speed against depth.

By default, GPT-5 is set up to provide quick replies. For more complex tasks, however, the Thinking mode breaks the request down into stages, weighs different possibilities, and takes extra time to return a more refined response.

According to OpenAI, the change stems directly from user feedback requesting faster and more flexible interactions.

What’s different now?

When activating Thinking mode, you’ll see two new options:

- Standard: prioritizes quicker answers while still balancing depth against speed.

- Extended: digs deeper into the problem, taking longer but aiming for more thorough results, which makes it the better fit for complex queries.

These controls are available only to Plus and Business subscribers. Free users won’t see the Standard and Extended options, while Pro plan subscribers continue to use the existing “light” (faster) and “heavy” (slower but deeper) labels.

Interestingly, GPT-5 was originally built to make this decision on its own, automatically picking between speed and depth depending on the request.

However, that approach drew widespread criticism from the community, a point even acknowledged by OpenAI’s CEO Sam Altman. As a result, the platform now gives users the choice, offering Fast, Thinking, and Auto modes so they can decide how the assistant handles each query.