The global technology sector is entering a phase where computing capacity has become a defining measure of competitiveness. As demand for artificial intelligence and large-scale data processing accelerates, even the world’s most valuable companies are facing soaring operational expenses.

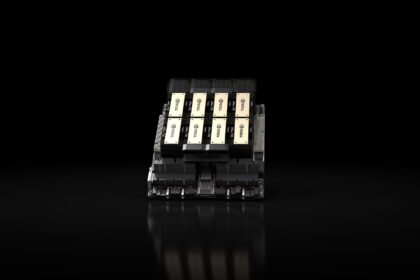

Against this backdrop, Microsoft has introduced its Maia 200 chip, a processor designed to rein in the escalating costs of running modern data centres while delivering higher efficiency.

According to industry sources cited by Windows Central, the launch of Maia 200 goes beyond a routine hardware upgrade. It represents a calculated response to the financial strain associated with training and operating large language models. Maintaining these systems requires enormous amounts of computing power, a burden that has raised concerns across the sector.

Reports suggest that OpenAI, a key Microsoft partner, could face losses approaching $14 billion by 2026 if current cost trends continue. The new chip, built on a 3-nanometre process by Taiwan Semiconductor Manufacturing Company (TSMC), is intended to slow what many describe as excessive spending across AI development.

Designed to Outperform Rivals

Microsoft says the Maia 200 stands apart not only for its processing power but also for how it handles heavy, sustained workloads. The company claims it delivers the highest performance among processors developed by major cloud service providers.

Internal benchmarks indicate that the chip delivers up to three times the performance of Amazon’s third-generation Trainium processor and exceeds Google’s seventh-generation Tensor Processing Unit in select artificial intelligence data formats.

The company estimates that Maia 200 improves performance per dollar by roughly 30 per cent compared with the previous generation of hardware. This metric is critical, as the cost of generating each AI response directly affects the commercial viability of services such as Copilot and ChatGPT.

By lowering per-query expenses, Microsoft hopes to establish a more stable and scalable business model, reducing reliance on constant infusions of external capital.
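To put the 30 per cent figure in per-query terms, the arithmetic can be sketched as follows. This is an illustration only, not a Microsoft calculation: it assumes that the cost of serving a query scales inversely with performance per dollar and that the workload is otherwise unchanged. The function name `cost_per_query_change` is hypothetical.

```python
def cost_per_query_change(perf_per_dollar_gain: float) -> float:
    """Fractional change in cost per query for a given fractional gain
    in performance per dollar.

    Assumption (not from the article): cost per query is inversely
    proportional to performance per dollar.
    """
    if perf_per_dollar_gain <= -1.0:
        raise ValueError("gain must be greater than -100 per cent")
    return 1.0 / (1.0 + perf_per_dollar_gain) - 1.0


# The article's estimate: roughly 30 per cent more performance per dollar.
change = cost_per_query_change(0.30)
print(f"Cost per query changes by {change:.1%}")  # Cost per query changes by -23.1%
```

Under that assumption, a 30 per cent gain in performance per dollar works out to roughly a 23 per cent reduction in the cost of each response, not a full 30 per cent, because the same budget buys 1.3 times the work.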

Rollout Plans and Environmental Focus

Beyond performance gains, Microsoft has emphasised sustainability as a core element of the Maia 200 project. Scott Guthrie, Executive Vice President of Cloud and AI, highlighted the chip’s use of a closed-loop water cooling system. Unlike traditional air-based cooling, the design is intended to eliminate water waste, addressing long-standing environmental concerns surrounding large data centre operations.

Deployment of the Maia 200 has already begun at Microsoft facilities in Iowa, with Arizona and other regions expected to follow. The processor is set to support future generations of advanced AI models, including upcoming iterations from OpenAI.

By combining cost efficiency, competitive performance, and reduced environmental impact, Microsoft is positioning the Maia 200 as a cornerstone of its long-term artificial intelligence strategy.