Customers ordering certain high-memory configurations of Apple Mac devices are encountering extended delivery times, as demand rises for systems capable of running advanced artificial intelligence workloads locally.

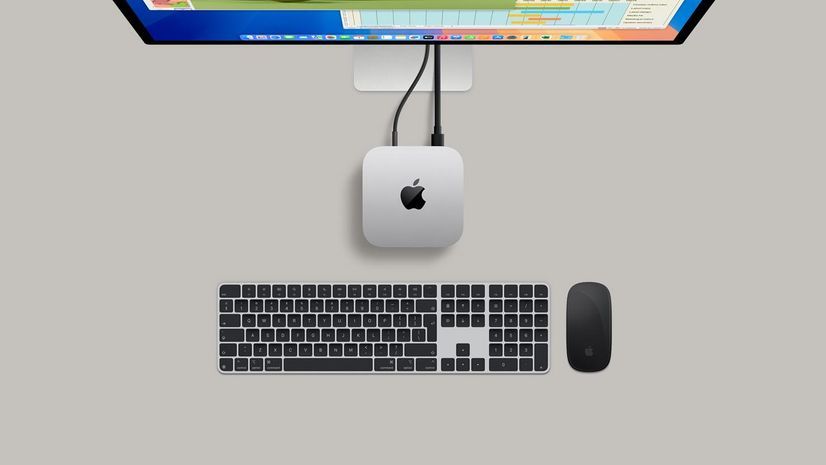

While standard versions of the MacBook Air, Mac mini, and iMac remain widely available for same-day or quick delivery, orders that include upgraded Unified Memory are now seeing shipping delays of up to three weeks. In some high-end configurations, wait times stretch even further.

Local AI is driving new buying behavior

Industry observers link the surge in demand to the growing popularity of locally run AI tools, particularly large open-source agents such as OpenClaw. Running advanced models, especially those with tens of billions of parameters, requires a memory capacity that typical consumer GPUs cannot supply efficiently.

For example, a 70-billion-parameter model stored in FP16 format, at two bytes per parameter, requires roughly 140GB of memory just to load its weights. Traditional multi-GPU setups can theoretically meet the requirement but often suffer from performance limitations due to PCIe bandwidth constraints.
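The 140GB figure can be checked with simple arithmetic: FP16 stores each parameter in two bytes. The short Python sketch below is illustrative only. The function name and the byte-width table are assumptions made for this example, not part of any library. It counts weights alone and uses decimal gigabytes (10^9 bytes), so real-world usage, which also includes the KV cache, activations, and runtime overhead, would be higher.

```python
# Approximate bytes needed to store one parameter in common numeric formats.
# (Illustrative table; quantized formats may add small per-block overheads.)
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: int, dtype: str = "fp16") -> float:
    """Return the memory needed to hold the weights alone, in decimal GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    params = 70_000_000_000  # a 70-billion-parameter model
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gb(params, dtype):.0f} GB")
```

Under these assumptions, the same model would need about 35GB for its weights at 4-bit precision, which is why quantized models can fit on systems with far less memory.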

Apple’s Unified Memory architecture offers a different approach. Because the CPU, GPU, and Neural Engine share a single memory pool, data does not need to move across slower interconnects. This design has made high-memory Macs increasingly attractive to developers, researchers, and enthusiasts looking to run large AI models locally.

The impact is most visible in premium configurations. Systems such as MacBook Pro and Mac mini models with expanded memory options now face two-to-three-week delivery windows. At the extreme end, a Mac Studio powered by the M3 Ultra with 512GB of Unified Memory may take five to six weeks to arrive.

Despite speculation about AI-driven demand, the delays are not attributable to a single factor. Apple has previously acknowledged that it is working to secure a sufficient supply of memory amid strong overall demand, as the global semiconductor market continues to feel pressure from AI infrastructure expansion.

Large-scale AI deployments from cloud providers and enterprise buyers have been the main driver of memory shortages. However, growing interest from individual users seeking robust local AI systems is adding further pressure to the supply chain.