U.S. President Donald Trump said that while American technology companies are leading the global race in artificial intelligence, the soaring electricity demands of large-scale data centers should not result in higher power bills for ordinary citizens.

In a post on his Truth Social platform, Trump stated that the White House is collaborating with major U.S. technology firms to ensure continued AI development without passing the cost of increased electricity consumption onto households and small businesses. He argued that limitations in the national power grid should not become a financial burden for consumers.

According to Trump, Microsoft will be the first firm to engage directly with the administration on the issue. He said discussions with the Redmond-based company have already led to changes that will begin this week. Those measures, Trump claimed, are intended to prevent Americans from “picking up the tab” for the power consumption of large AI data centers through higher utility bills.

Trump said Microsoft has announced a five-point plan for what the company calls “community-first AI infrastructure,” and he stressed that major technology companies must pay for the energy demands of their own facilities. “Data centers are key to the AI boom and to keeping Americans free and secure,” Trump wrote, “but the big technology companies who build them must pay their own way.”

Power grid strain intensifies

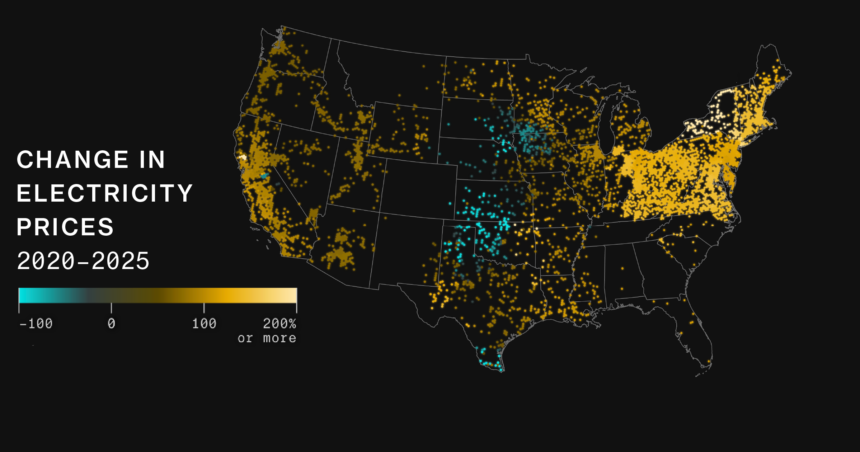

The rapid expansion of AI infrastructure has placed growing pressure on the U.S. electricity system. Building new power plants and expanding transmission networks often takes many years, leaving utilities struggling to keep pace with demand.

In several states, the arrival of large multi-megawatt and multi-gigawatt data center projects has contributed to electricity price increases of up to 36 percent, according to industry estimates.

Those increases have hit residential customers and small businesses hardest. These groups typically benefit the least from the AI boom, yet they bear a disproportionate share of rising energy costs.

Concerns about power availability have been echoed by industry leaders. Mark Zuckerberg, founder and CEO of Meta Platforms, stated in mid-2024 that electricity supply would become the biggest constraint on AI growth once shortages of GPUs begin to ease.

The tech sector is also facing memory shortages that are expected to persist until 2028, but analysts note that expanding power generation and grid capacity is far more complex and time-consuming.

Temporary fixes, long-term impact

To keep projects on schedule, some AI hyperscalers, including OpenAI and xAI, have turned to on-site power generation to run their facilities before full grid connections are approved. These stopgap solutions let thousands of GPUs come online quickly, but they are only temporary. Once the data centers are connected to local utilities, they still add substantial demand to regional power systems.

The issue has also drawn attention from lawmakers. Democrats in the U.S. Senate have pressed companies such as Amazon and Google for explanations over rising residential energy costs linked to data center expansion.

Balancing AI leadership and consumer costs

With pressure coming from both the White House and Congress, the technology sector faces increasing scrutiny over how it manages the energy footprint of AI. The administration’s message is clear: the United States intends to remain competitive in artificial intelligence, but not at the expense of higher electricity bills for everyday Americans.

Whether coordinated action between government and industry can rein in costs while sustaining the AI buildout remains an open question. For now, the debate highlights a growing reality of the AI era: computing power is no longer just a technical challenge but also a national infrastructure and cost-of-living issue.