Nearly a year after previewing its next-generation Trainium AI accelerator, Amazon Web Services has officially released Trainium3, marking a major leap in the company’s custom-silicon roadmap. The chip now powers the newly available EC2 Trn3 UltraServers, unveiled during AWS re:Invent and positioned as a flagship option for large-scale AI training inside the Elastic Compute Cloud platform.

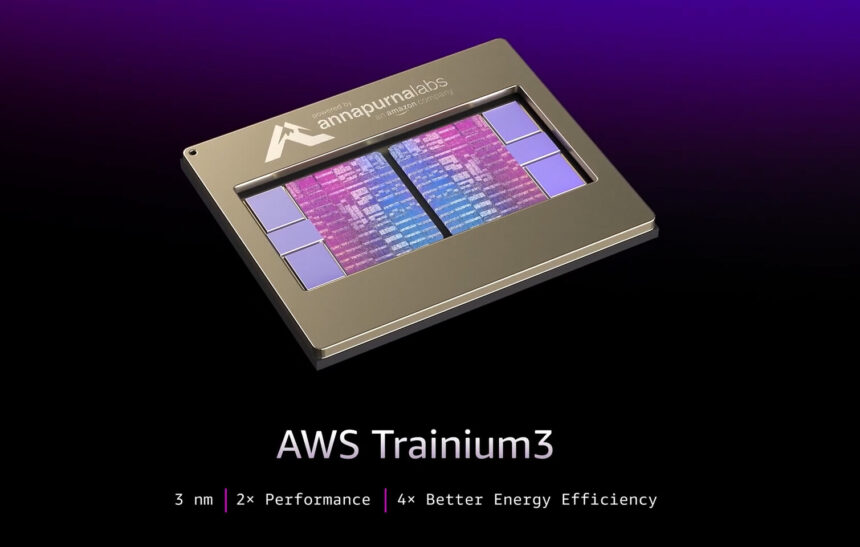

Trainium3: A 3-nm Accelerator Built for Modern AI Models

Manufactured by TSMC on a 3-nm process, Trainium3 delivers:

- 2.52 PFLOPs of FP8 compute per chip

- 144 GB of HBM3e memory

- 4.9 TB/s of memory bandwidth

AWS attributes these gains to substantial architectural updates aimed at balancing compute, memory, and data movement—critical for training today’s LLMs, mixture-of-experts architectures, and multimodal systems.
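One way to make the balance between compute and memory concrete is the roofline "ridge point": the number of FP8 operations a kernel must perform per byte read from HBM before the chip is limited by compute rather than by memory bandwidth. The sketch below is a back-of-the-envelope calculation, not a metric published by AWS. It uses only the per-chip figures quoted above, and the function names are illustrative.

```python
# Per-chip peak figures quoted in the article.
PEAK_FP8_FLOPS = 2.52e15  # 2.52 PFLOPs of FP8 compute
HBM_BANDWIDTH = 4.9e12    # 4.9 TB/s of memory bandwidth, in bytes per second


def ridge_point(peak_flops: float, bandwidth: float) -> float:
    """FLOPs per byte at which a kernel stops being bandwidth-bound."""
    return peak_flops / bandwidth


def attainable_flops(intensity: float,
                     peak_flops: float = PEAK_FP8_FLOPS,
                     bandwidth: float = HBM_BANDWIDTH) -> float:
    """Simple roofline model: the lower of the compute and memory ceilings."""
    return min(peak_flops, intensity * bandwidth)


ridge = ridge_point(PEAK_FP8_FLOPS, HBM_BANDWIDTH)
print(f"Ridge point: about {ridge:.0f} FLOPs per byte")

# Illustrative arithmetic intensities (FLOPs per byte), chosen arbitrarily.
for intensity in (50, 500, 5000):
    share = attainable_flops(intensity) / PEAK_FP8_FLOPS
    print(f"intensity {intensity:>4}: {share:.0%} of peak FP8 compute")
```

Under these peak numbers, a kernel needs roughly 514 FP8 operations per byte of HBM traffic to keep the compute units busy. Real workloads also depend on on-chip memory, interconnect, and software, none of which this simple model captures.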

The new accelerator expands its supported formats to include FP32, BF16, MXFP8, and MXFP4. It also strengthens hardware support for structured sparsity, micro-scaling, and stochastic rounding, and upgrades its collective communication engines.
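Of the features listed, stochastic rounding is the easiest to illustrate in a few lines. Instead of always rounding to the nearest representable value, it rounds up or down at random, with probabilities chosen so that the result is correct on average, which helps small gradient updates survive in low-precision formats. The sketch below is a simplified software illustration on a uniform grid. It is not how Trainium3 implements the feature, and the function name and `step` parameter are assumptions made for the example.

```python
import math
import random


def stochastic_round(x: float, step: float = 1.0, rng=random) -> float:
    """Round x to a multiple of `step`, rounding up with probability equal
    to the fractional distance from the lower grid point (illustrative)."""
    scaled = x / step
    lower = math.floor(scaled)
    frac = scaled - lower  # in [0, 1): how far x sits above the lower point
    rounded = lower + (1 if rng.random() < frac else 0)
    return rounded * step


rng = random.Random(0)  # fixed seed so the experiment is repeatable
samples = [stochastic_round(0.3, 1.0, rng) for _ in range(100_000)]
mean = sum(samples) / len(samples)

print("round-to-nearest:", round(0.3))       # always 0, so the value is lost
print("stochastic mean :", f"{mean:.3f}")    # close to 0.3 on average
```

Round-to-nearest maps every 0.3 to 0, whereas the stochastic version returns 1 about 30% of the time, so the average over many updates stays close to the true value.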

UltraServer Deployment: Where Trainium3’s Real Power Shows

While the chip itself brings notable upgrades, AWS emphasizes that Trainium3’s biggest advantages appear at system scale. A full Trn3 UltraServer integrates:

- 144 Trainium3 chips

- 362 PFLOPs of aggregate FP8 compute

- 20.7 TB of HBM3e

- 706 TB/s of memory bandwidth
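The aggregate figures follow directly from the per-chip specifications listed earlier, multiplied by the 144 chips in an UltraServer. The short check below reproduces that arithmetic. It assumes decimal units (1 TB = 1,000 GB), and the variable names are illustrative.

```python
# Per-chip figures and chip count quoted in the article.
CHIPS_PER_ULTRASERVER = 144
FP8_PFLOPS_PER_CHIP = 2.52
HBM_GB_PER_CHIP = 144
HBM_TBPS_PER_CHIP = 4.9

aggregate_pflops = CHIPS_PER_ULTRASERVER * FP8_PFLOPS_PER_CHIP
# Assumption: decimal units, so 1 TB = 1000 GB.
aggregate_hbm_tb = CHIPS_PER_ULTRASERVER * HBM_GB_PER_CHIP / 1000
aggregate_bw_tbps = CHIPS_PER_ULTRASERVER * HBM_TBPS_PER_CHIP

print(f"FP8 compute : {aggregate_pflops:.1f} PFLOPs")
print(f"HBM3e       : {aggregate_hbm_tb:.1f} TB")
print(f"Bandwidth   : {aggregate_bw_tbps:.1f} TB/s")
```

The products come to roughly 362.9 PFLOPs, 20.7 TB, and 705.6 TB/s, consistent with the rounded system-level figures.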

Based on internal testing shared by AWS, this platform delivers the following gains over Trainium2-based systems:

- 4.4× more compute performance

- 4× higher energy efficiency

- Nearly 4× more memory bandwidth

A key component of this uplift is NeuronSwitch-v1, a new all-to-all interconnect that doubles inter-chip bandwidth relative to previous UltraServers. An upgraded Neuron Fabric cuts chip-to-chip communication latency to under 10 microseconds. Meanwhile, EC2 UltraClusters 3.0 provide multi-petabit networking for training jobs spanning “hundreds of thousands of Trainium chips.”
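To see why both the bandwidth doubling and the sub-10-microsecond latency matter, a common first-order model treats the time to send a message as a fixed latency plus the message size divided by bandwidth. The sketch below applies that model. The 10-microsecond latency is the upper bound quoted above, while the baseline bandwidth is a purely hypothetical placeholder, since the article gives no per-link figure.

```python
def transfer_time_s(message_bytes: float, latency_s: float,
                    bandwidth_bytes_per_s: float) -> float:
    """First-order latency-plus-bandwidth model of one chip-to-chip transfer."""
    return latency_s + message_bytes / bandwidth_bytes_per_s


LATENCY_S = 10e-6          # upper bound quoted in the article
BASE_BANDWIDTH = 100e9     # HYPOTHETICAL baseline (bytes/s), not an AWS figure

speedups = {}
for label, size in (("4 KiB", 4 * 1024), ("64 MiB", 64 * 1024 * 1024)):
    before = transfer_time_s(size, LATENCY_S, BASE_BANDWIDTH)
    after = transfer_time_s(size, LATENCY_S, 2 * BASE_BANDWIDTH)
    speedups[label] = before / after
    print(f"{label:>6}: doubling bandwidth gives a {speedups[label]:.2f}x speedup")
```

In this model, large transfers approach the full 2× benefit of doubled bandwidth, while small messages are dominated by latency and barely change. That is one reason interconnect upgrades tend to target both quantities.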

Performance Gains: Internal Workloads See Significant Speedups

AWS says Trainium3 is engineered to reduce data-movement bottlenecks that hinder large transformers and MoE models—especially those with extended context windows or multimodal components. Internal testing using OpenAI’s GPT-OSS showed:

- 3× higher throughput per chip

- 4× lower inference latency

These results reflect optimizations at both the hardware and system levels.

Early Customers Report Up to 50% Lower Training Costs

Several companies are already using Trainium3 in production:

- Anthropic, Metagenomi, and Neto.ai report cost reductions of up to 50% compared with alternative hardware.

- Amazon Bedrock is running production workloads on the new accelerator.

- AI video startup Decart is using Trainium3 for real-time generative video, achieving 4× faster frame generation at half the cost of GPUs.

The early customer feedback positions Trainium3 as AWS’s strongest play yet in the accelerating AI-infrastructure market.

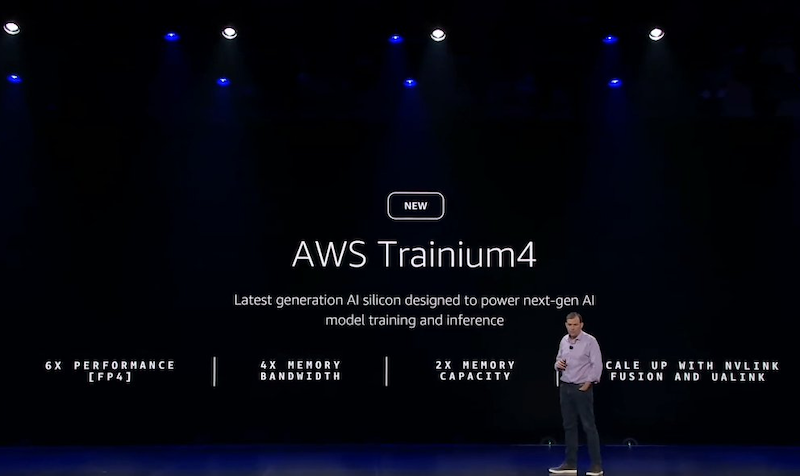

Trainium4 Announced: A Major Performance Jump Planned

Alongside the Trainium3 debut, AWS CEO Matt Garman briefly previewed Trainium4, the next major accelerator in the lineup. AWS says the upcoming chip will deliver, compared with Trainium3:

- 6× the FP4 throughput

- 3× the FP8 performance

- 4× the memory bandwidth

AWS calls the FP8 performance uplift a “foundational leap,” indicating that the chip may allow organizations to train models three times faster or serve triple the inference workload.

Notably, Trainium4 will support Nvidia’s NVLink Fusion interconnect. This means upcoming AWS racks will be able to house both GPU and Trainium systems in common MGX-based rack designs, enabling more flexible deployment strategies.

A Roadmap Focused on System-Scale AI

With Trainium3 entering full production and Trainium4 already in development, AWS is clearly preparing for a future where AI performance is dictated not only by accelerator speed, but by networking and system architecture at massive scale. The company’s ability to execute on this strategy will shape its standing in the increasingly competitive race to build infrastructure for frontier-level AI.

As AWS expands its silicon portfolio, the battle between cloud providers for AI dominance is entering a new phase—and Trainium3 signals that AWS intends to compete at the highest tier.