OpenAI’s long-running legal struggle over copyright and AI training data has entered a more serious and consequential phase. After years of lawsuits from publishers and news organizations accusing the company of using copyrighted material without permission or payment, a U.S. federal court has now ordered OpenAI to produce up to 20 million ChatGPT chat logs as part of its ongoing confrontation with The New York Times.

The ruling marks one of the most significant developments yet in the broader debate over whether training AI models on publicly available—but copyrighted—content constitutes fair use.

A Growing Wave of Copyright Lawsuits

For several years, OpenAI has been the target of multiple copyright infringement lawsuits brought by media outlets, authors, and publishers. At the center of these cases is a common allegation: that ChatGPT was trained on copyrighted journalism without authorization, licensing agreements, or compensation.

Among all the lawsuits, The New York Times v. OpenAI and Microsoft stands out as the most prominent. Filed in late 2023, the suit argues that OpenAI unlawfully used Times articles to train its large language models and that ChatGPT can reproduce or closely summarize protected content, undermining the publisher’s business model.

OpenAI has repeatedly denied wrongdoing, maintaining that its training process relies on publicly available data and is protected under the doctrine of fair use.

OpenAI’s Mixed Track Record in Court

Until recently, OpenAI had enjoyed relative success defending against these claims. In 2024, a federal judge dismissed a copyright infringement lawsuit filed by Raw Story and AlterNet, ruling that the plaintiffs failed to demonstrate:

- exactly what copyrighted material ChatGPT was trained on, and

- how that material was directly used without transformation

The ruling reinforced OpenAI’s argument that generalized claims about scraping internet content are insufficient on their own to prove copyright infringement.

But the New York Times case has proven more difficult to dismiss.

Court Orders Release of 20 Million Chat Logs

In a ruling that could shape the future of AI litigation, U.S. Magistrate Judge Ona Wang, presiding in Manhattan, ordered OpenAI to produce 20 million ChatGPT interaction logs. The logs are intended to help determine whether ChatGPT generates responses that reproduce copyrighted Times content in a way that would support the publisher’s claims.

OpenAI strongly objected to the order, arguing that it would:

- undermine long-standing user privacy protections

- expose sensitive user conversations

- create an unprecedented discovery burden

However, Judge Wang rejected those arguments, stating that the logs are necessary to evaluate the claims and can be handled under strict safeguards.

“There are multiple layers of protection in this case precisely because of the highly sensitive and private nature of much of the discovery,” Wang wrote, emphasizing that privacy concerns could be mitigated.

The court concluded that producing the logs would not violate ChatGPT users’ privacy, given that the logs would be anonymized and covered by protective orders.

What the Judges Are Really Examining

The legal core of the case goes beyond metadata or copyright notices. As U.S. District Judge Colleen McMahon wrote in an earlier ruling in a related dispute, the key issue is not the removal of copyright management information (CMI), such as author names and copyright notices, but the alleged uncompensated use of journalistic content to build ChatGPT.

“The alleged injury for which Plaintiffs truly seek redress is not the exclusion of CMI but the use of Plaintiffs’ articles to develop ChatGPT without compensation.”

In other words, the underlying question is whether AI training itself constitutes a form of economic harm that current copyright law is not yet equipped to address.

OpenAI Appeals the Order

OpenAI has already filed an appeal seeking to overturn Magistrate Judge Wang’s ruling. The appeal will be reviewed by U.S. District Judge Sidney Stein, who now must decide whether the discovery order stands or is modified.

This procedural move reflects how high the stakes are for OpenAI. If the logs reveal frequent reproduction or close paraphrasing of protected articles, the outcome could reshape not only this case but also future AI development practices.

Industry Pushback and Public Criticism

The ruling has prompted sharp reactions from the media industry. Frank Pine, executive editor at MediaNews Group, accused OpenAI’s leadership of acting in bad faith:

“OpenAI’s leadership was hallucinating when they thought they could get away with withholding evidence about how their business model relies on stealing from hardworking journalists.”

Meanwhile, OpenAI CEO Sam Altman has continued to defend the company’s position, arguing that copyright law does not categorically prohibit the use of protected works for training AI. Altman has also openly acknowledged that building models like ChatGPT without copyrighted material may be practically impossible, highlighting the tension between innovation and existing legal frameworks.

Why This Case Matters for the Future of AI

The New York Times lawsuit comes at a moment when the AI industry faces mounting challenges:

- concerns about running out of high-quality training data

- increased scrutiny from regulators worldwide

- growing pressure from content creators

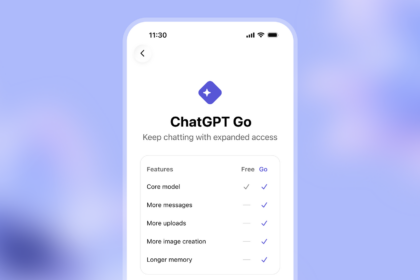

- reports that OpenAI may introduce ads into ChatGPT to offset rising costs

If the court ultimately rules against OpenAI—or narrows the scope of fair use—it could force AI developers to fundamentally rethink how models are trained, licensed, and monetized.

As the case moves forward, the outcome could set a precedent for how courts interpret copyright law in the age of generative AI. More than just a dispute between one publisher and one AI company, it represents a broader reckoning between legacy media economics and the data-hungry nature of modern AI systems.

Whether OpenAI’s fair-use defense holds—or collapses under scrutiny—may determine how AI models are built, regulated, and paid for in the years ahead.